It seems like everyone is talking about Serverless and AWS Lambda these days.

Serverless compute, serverless databases, serverless message orchestration, the list goes on. It’s clear that there are more and more serverless infrastructure options than ever before.

A standout service is AWS Lambda. Lambda is a function-as-a-service (FaaS) offering that lets you “run code without worrying about servers”. This suits all kinds of use cases, ranging from one-off scripts such as database maintenance to applications that handle millions of requests. According to Werner Vogels, the CTO of Amazon, Amazon itself is relying on AWS Lambda to build internal services more than ever before.

So what’s with all the fuss? Why is this technology becoming the default choice for building applications? And how did this happen so quickly?

These are some of the questions we’re going to answer in this article.


History of Compute and the Introduction of AWS Lambda

Before we dive into the details of how Lambda arrived on the scene, let’s look back at how we got here.

Early Data Centers

One of the earliest examples of a data center is ENIAC, or Electronic Numerical Integrator and Computer. Completed in 1945, ENIAC was originally designed to calculate artillery firing trajectories during WW2. The system itself took up over 1800 square feet of space.

Early systems such as ENIAC were difficult and expensive to maintain. They required specialized components to operate, dozens if not hundreds of staff, and strict environment requirements in which they could run. However, the benefits were profound in an era where much computation was still being performed on pen and paper. Throughout the second half of the 20th century, the usage of centralized computing infrastructure (i.e. data centers) continued to grow. Used in a wide variety of applications such as telecommunication systems, Apollo missions, and more, the data center was a useful stepping stone in modern compute infrastructure.

Fast forward to the late 1990s and early 2000s. In this era, the need for mass internet connectivity began to emerge. You may remember it as the “dotcom” era, when seemingly every company wanted to get online. Companies like Amazon, which was founded in 1994 as an online book store, required large amounts of compute infrastructure to grow.

At this point, many businesses relied on on-premises infrastructure. In other words, businesses would buy and provision their own computer hardware and host their application servers in-house. This method of computing required long lead times to order hardware, receive it, and provision it so that it would be ready for production.

You can imagine the developer experience at this time. You realize your business traffic is increasing and you need additional hardware. But getting it requires cost justification, hardware ordering, setup, and provisioning – quite a few steps between realizing you need capacity and actually deploying it.

The consequences of on-premises hosting were profound in terms of long lead times and maintenance costs. It wasn’t until the mid-2000s, when companies began offering “servers for rent”, that things began to change.

AWS Introduces EC2 in 2006

Amazon was one of the first companies to realize there was a significant opportunity in the infrastructure-as-a-service model. EC2 was launched in 2006 and allowed developers to rent virtual machines from Amazon without having to buy or install hardware themselves. These servers would sit in Amazon-secured data centers and be accessible remotely to customers. Developers could use these servers to host web applications, set up clusters for fluid simulations, and a whole bunch of other use cases.

The pay-by-the-hour cost model was an attractive proposition in an era when getting hardware from order to installation could take weeks if not months. EC2 rapidly gained popularity among developers. Little did AWS know, the launch of EC2 would begin a computing revolution and the multi-billion-dollar industry we now call Cloud Computing.

The Woes of a Developer Using EC2

EC2 was (and still is) an extremely popular AWS service. In fact, it is the backbone of other AWS services that require general compute infrastructure. One of the main downsides of EC2 is inherent to the product itself – you are managing SERVERS – and servers can be complicated.

Server management is not necessarily an easy task whether it be virtual or physical. As an EC2 customer, application owners are still responsible for things like security, networking setup, autoscaling, and a whole bunch of other factors. This is time consuming and takes away from developers (and companies) spending their time building products that actually add value.

The frustration of setting up and maintaining EC2 infrastructure created a need for another level of abstraction. Customers, whether they realized it at the time or not, wanted all the benefits of cloud-managed compute without the cost of setup, security, scaling, and maintenance.

In the same way AWS revolutionized an industry by making EC2 machines available to the masses, they chose to add a new level of abstraction that would simplify the developer experience. AWS Lambda was born.

AWS Lambda was Born

In 2014, a new era of computing began with the launch of AWS Lambda.

AWS Lambda is a serverless compute platform. Its main attraction is that it allows developers to run application code without having to worry about provisioning or managing servers. The actual running of your code is completely abstracted away from you as the user.

Behind the scenes, AWS worries about the infrastructure. They manage the data center, the hardware that your code runs on, the scaling of your code, and so on. Users can run anything on AWS Lambda, from small one-off workloads such as a periodic cron job to full-scale event-driven workloads such as APIs or data processing.

The attractiveness of Lambda goes beyond saving yourself from the headache of hardware management. It also has much to do with the pricing model Lambda offers.

In a traditional compute model using EC2, you pay for your instances by the hour, even when an instance is sitting idle or is only partially utilized.

Lambda changes the game in terms of how it bills customers for usage. It uses a pay-per-invocation model where users are charged based on three key factors:

- The number of invocations

- The duration of invocations

- The amount of memory you provision for the invocation

You can learn more about the pricing structure here. But as a quick example, assume you have an application that performs 10 million invocations per month, provisions 256MB of memory, and runs for approximately 200ms per invocation. In this example, you would only pay about $10 per month.
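To see where that figure comes from, here is a rough sketch of the arithmetic in Python. The two rates are assumptions based on commonly published on-demand prices for x86 functions in a US region; they vary by region and architecture and change over time, and the free tier is ignored, so treat the result as an estimate only.

```python
# Assumed on-demand rates (illustrative only; check current AWS pricing).
PRICE_PER_MILLION_REQUESTS = 0.20    # USD per 1M invocations (assumption)
PRICE_PER_GB_SECOND = 0.0000166667   # USD per GB-second of compute (assumption)


def estimate_monthly_cost(invocations, memory_mb, avg_duration_ms):
    """Estimate a monthly Lambda bill in USD, ignoring the free tier."""
    # Charge for the number of invocations.
    request_cost = invocations / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    # Charge for duration, weighted by the memory provisioned (GB-seconds).
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    compute_cost = gb_seconds * PRICE_PER_GB_SECOND
    return request_cost + compute_cost


# The example from the text: 10 million invocations, 256MB, 200ms each.
cost = estimate_monthly_cost(10_000_000, 256, 200)
print(f"${cost:.2f}")  # about $10.33 with the assumed rates
```

Under these assumptions, roughly $2 of the bill comes from the request charge and a little over $8 from compute time, which shows how the three billing factors combine.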

The value proposition in terms of cost is pretty clear. Better yet, you never need to worry about maintaining servers. Instead, you write Functions. Functions are the units in which Lambda operates. Functions contain your code and all the dependencies you require to run that code. Lambda is responsible for loading your function code onto an available server, and executing it in response to a request. This entire action happens completely transparently to you as the user.

Let’s take a closer look at creating functions to understand a typical Lambda workflow.

Lambda Workflow

The typical Lambda user workflow involves 3 important steps:

Create, Upload, Run

To get started using Lambda, the first step is to create our function. This can be done directly in the AWS console, via the CLI, or using Infrastructure as Code solutions like CDK or CloudFormation.

Creating our function requires uploading the function code itself. Lambda supports many popular programming languages including Python, JavaScript, Java, Go, C#, and more.

There are a whole bunch of other options that you can specify including function permissions, concurrency, and integration with other AWS services. But for all intents and purposes, this is all you really need to do to create your function.

From there, you’re ready to run your function. When you invoke it, Lambda will load your uploaded code onto an AWS-managed server, run the code, and return the response. All of this happens in a fraction of a second (usually) and is completely transparent to you as the user. You have no insight into which server the code ran on or where it exists in the physical world (beyond the region). Pretty neat!
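To make the create and run steps concrete, here is a minimal sketch of what a Python function might look like. The handler name `lambda_handler` follows the common Python convention, while the `name` field in the event and the response shape are assumptions made up for this illustration.

```python
import json


def lambda_handler(event, context):
    """Entry point that Lambda calls once per invocation."""
    # "name" is a hypothetical field on the incoming event.
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Local smoke test. In Lambda, the service supplies event and context.
if __name__ == "__main__":
    print(lambda_handler({"name": "Lambda"}, None))
```

Because the handler is just a function that takes an event and returns a result, you can call it locally with a sample event before uploading it.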

You may start to realize the numerous benefits the Lambda model offers. But let’s dig a bit deeper into why AWS Lambda is so useful and popular.

Why is AWS Lambda So Useful and Popular?

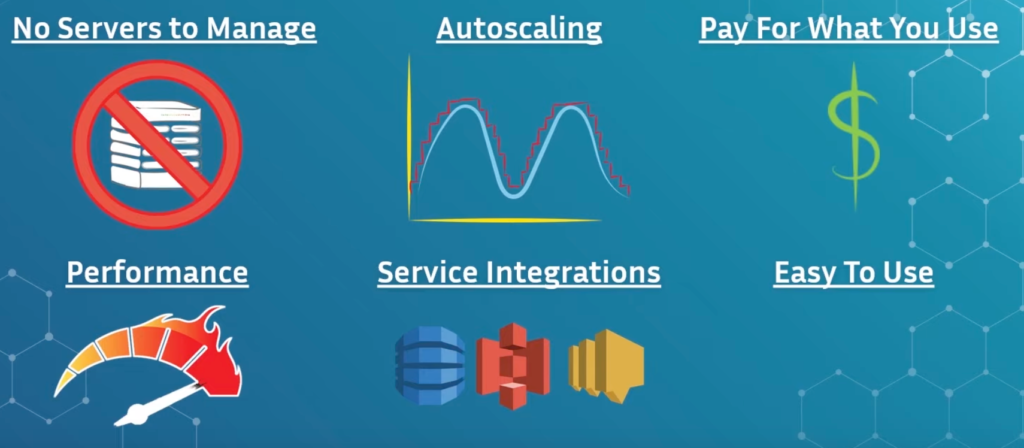

No Servers to Manage

One of the main attractions of using AWS Lambda is that there are no servers to manage. This has benefits for developers since you don’t need to worry about things like security, certificate setups, or other frustrating yet common tasks.

In addition, it means that your organization does not need folks specially trained in sys ops to maintain physical hardware. Users get to leverage AWS’ existing top-tier infrastructure without having to build or maintain it themselves.

Autoscaling

A major benefit of using Lambda is its ability to autoscale. Autoscaling is the ability of an infrastructure component to add or remove capacity automatically as load changes. In the past, developers would need to monitor their EC2 instances and incoming traffic rates, and set up scaling policies that would add more instances. This approach certainly worked but was cumbersome to set up and monitor.

Lambda on the other hand supports completely automatic and transparent autoscaling. For example, if you were to create a Lambda function that hosts an API and you receive a burst of requests, Lambda would automatically scale itself up to handle them concurrently. More specifically, it would launch your code onto more underlying containers to support the increased workload.

In my personal experience, Lambda scales really well. I’ve seen applications running on Lambda that handle over 4,000 requests per second. Hopefully this gives you an idea of what Lambda is capable of.
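As a rough rule of thumb, the number of function instances running at once is about the request rate multiplied by the average duration of each request. The sketch below applies that to the 4,000 requests per second figure. The 200ms duration is an assumption borrowed from the pricing example earlier, and real workloads will vary.

```python
import math


def estimate_concurrency(requests_per_second, avg_duration_ms):
    """Approximate concurrent executions: request rate x average duration."""
    return math.ceil(requests_per_second * avg_duration_ms / 1000)


# 4,000 requests per second, assuming each request takes about 200ms.
needed = estimate_concurrency(4000, 200)
print(needed)  # 800
```

An estimate like this is useful for checking a workload against the concurrency limits on your account before a traffic spike arrives.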

Pay for What You Use

The pay for what you use model is an attractive value proposition for most developers and companies. Not having to make long term decisions such as ordering hardware or paying by the hour is a welcome change.

In addition, Lambda is included in the AWS free tier, which gives you 1 million requests per month for FREE! You can learn more about the free tier in this article.

Performance

Lambda performance is top notch. Typically, developers won’t even realize their code is being provisioned onto containers “just in time” before being executed. The delay this causes is known as a cold start: Lambda is “cold” and needs to load your code into a new container before executing it. Note that Lambda will also keep that container alive for a period of time afterwards so that subsequent requests are served with minimal latency.

The cold start problem is typically only a real issue if your application requires very tight and predictable latency, and even then it can be mitigated with a feature called Provisioned Concurrency. For applications such as event processing, it isn’t much of an issue.
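The difference between a cold and a warm invocation can be sketched in a few lines of Python. Code at module level runs once when a container is initialized, while the handler runs on every request, so state set up at module level is reused by warm invocations. The names `INIT_TIME` and `invocation_count` are hypothetical and exist only for this illustration.

```python
import time

# Module-level code runs once per container, during the cold start.
INIT_TIME = time.time()
invocation_count = 0


def lambda_handler(event, context):
    """Report whether this invocation was the first one in this container."""
    global invocation_count
    invocation_count += 1
    return {
        "cold_start": invocation_count == 1,
        "container_age_seconds": round(time.time() - INIT_TIME, 3),
    }


# Simulate two invocations landing on the same container.
first = lambda_handler({}, None)
second = lambda_handler({}, None)
print(first["cold_start"], second["cold_start"])  # True False
```

This is also why expensive setup, such as creating database connections or loading configuration, is commonly placed outside the handler: only the cold invocation pays for it.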

Service Integrations

This is probably one of my favourite reasons for using AWS Lambda – service integrations. Natively, Lambda is able to be integrated with many AWS services to offer very powerful functionality. Here are a couple of integration examples:

- SQS – Lambda will poll your SQS queue and process messages delivered to it. It will scale up when there are many messages to be processed to increase concurrency.

- API Gateway – Lambda will integrate with API Gateway to be the compute layer for your REST APIs.

- S3 – You can configure your function to be invoked when a user uploads or modifies a file in S3. This is great for use cases such as image processing, facial recognition, and many others.

- DynamoDB – You can link your function to DynamoDB (via DynamoDB Streams) such that any time an insert, update, or delete event occurs on your table, your Lambda will be invoked. The invocation contains the records that were changed. Very powerful for creating real time dashboards and change detection use cases.

- CloudWatch Events (now Amazon EventBridge) – You can set up cloud based CRON jobs that trigger your function periodically to perform a job like a database update or snapshot.

This is a small subset of Lambda’s integration capabilities but gives you an idea of what’s possible with the service.
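As an illustration of the S3 integration, here is a minimal sketch of a handler that reads the bucket and object key from each record in an S3 notification event. The sample event is trimmed down to only the fields used here, and the bucket and key names are made up. Object keys arrive URL-encoded in these events, which is why the key is decoded before use.

```python
from urllib.parse import unquote_plus


def lambda_handler(event, context):
    """Return a (bucket, key) pair for each object in an S3 event."""
    uploaded = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Keys are URL-encoded in the event (spaces arrive as "+").
        key = unquote_plus(record["s3"]["object"]["key"])
        uploaded.append((bucket, key))
    return uploaded


# Trimmed-down sample event with hypothetical bucket and key names.
sample_event = {
    "Records": [
        {
            "s3": {
                "bucket": {"name": "example-bucket"},
                "object": {"key": "photos/my+cat.png"},
            }
        }
    ]
}
print(lambda_handler(sample_event, None))
```

A real image-processing function would go on to download each object and act on it, but the shape of the handler stays the same: loop over the records and process each one.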

Easy to Use

Lambda is a very powerful service that has a ton of potential. But beyond that, it is comically easy to set up and get running. I see Lambda as a service that is easy to get started with but challenging to master. There is a ton of configuration you can do with your function, such as adding layers, attaching an Elastic File System (EFS), tuning concurrency, and more. These are not mandatory for typical usage but give you the flexibility to use Lambda for a variety of different use cases.

Who Else Uses Lambda?

It’s no secret that internally AWS and Amazon heavily rely on the Lambda service for their applications. Werner Vogels, the CTO of Amazon, made this clear in a recent re:Invent speech.

However there are many other popular companies leveraging Lambda for their workloads including some of the name brands below.

This is just a small sample of companies that I was able to collect. But I can tell you from first hand experience that Lambda is a rapidly growing AWS service that is more often than not becoming the default compute option for developers.

Wrap Up

In this article, I’ve laid out some of the history of computing and how we got from primitive data centers to abstract computing using AWS Lambda. The benefits of using Lambda are numerous and clear including ease of use, scaling, cost, and the wide variety of integrations with other AWS services. Many developers are opting to use Lambda for workloads as a default choice, and the service is becoming more and more popular as time goes on.

I hope you enjoyed this article, and if you’re interested in learning more about AWS Lambda, check out these articles below.