Are you wondering which projects you can build to start your AWS learning journey? Well, you are in the right place! This article lists some projects that will familiarize you with some of the most widely used and well-known AWS services.

Are you new to the cloud or to AWS and want to learn the fundamentals of cloud computing? You can start with the AWS Cloud Practitioner course offered by AWS. It will familiarize you with cloud concepts, the core services AWS offers, and key terminology, and give you a foundational understanding of the platform itself.

Once you are familiar with the core AWS services, you'll want to dig into some hands-on projects to develop practical knowledge.

The project use cases below are based on real-life scenarios implemented on AWS across various industries.

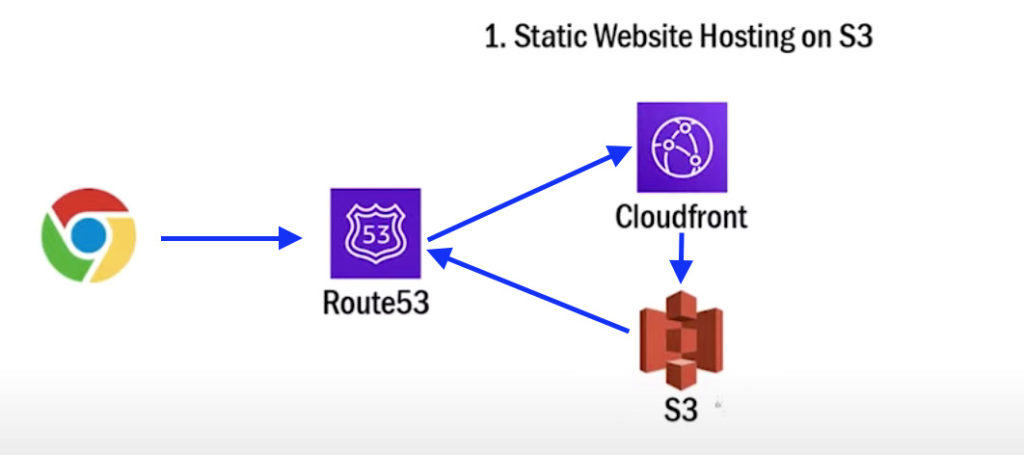

Project #1: Static Website Hosting

Host a static website using Amazon S3. If you are new to AWS, this is the most common starting project and the one we most recommend.

AWS services involved

- Simple Storage Service | S3 (Object storage service; all-purpose data store for raw objects of many different types and sizes)

- Route 53 (Cloud Domain Name System web service; helps route end users to internet applications hosted on AWS or elsewhere)

- CloudFront (Content delivery network service; helps cache and distribute content quickly and reliably with low latency)

Prerequisites

- A static website (at minimum, an index.html file that will be publicly accessible)

- AWS account and IAM user with necessary permissions

Development

- Create an S3 bucket, enable static website hosting on it, and upload your website's index.html file.

- Use Route 53 to set up a custom domain name and point it at the S3 bucket that hosts your site. Visitors then only need to enter your custom domain name, and Route 53 resolves it to the website stored in S3.

- In addition, putting CloudFront in front of a static website that does not change often can be very cost effective. CloudFront retrieves your files from S3 and caches them at edge locations around the world, so visitors are served from a nearby location with optimal performance.

- Reconfigure Route 53 to direct end users to CloudFront instead of directly to S3.

To summarize, you upload your website's index.html file to S3, use CloudFront to cache and distribute the content from S3 through its edge locations, and finally set up a custom domain in Route 53 that directs all traffic for your website, through CloudFront, to the site stored in S3.
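For the site to be publicly accessible when served directly from S3, the bucket needs a policy that grants public read access to its objects (S3 Block Public Access also has to be turned off for that bucket). Below is a minimal sketch that builds such a policy in Python; the bucket name `example.com` is a hypothetical placeholder. If you later serve the site only through CloudFront, you would typically restrict access to CloudFront instead of keeping the bucket public.

```python
import json


def public_read_policy(bucket_name: str) -> str:
    """Build an S3 bucket policy that lets anyone read (GET) objects in the bucket."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "PublicReadGetObject",
                "Effect": "Allow",
                "Principal": "*",
                "Action": "s3:GetObject",
                # Applies to every object in the bucket, including index.html
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        ],
    }
    return json.dumps(policy, indent=2)


if __name__ == "__main__":
    # "example.com" is a hypothetical bucket name; replace it with your own.
    print(public_read_policy("example.com"))
```

You could paste the printed JSON into the bucket's policy editor in the console, or save it to a file and apply it with `aws s3api put-bucket-policy --bucket example.com --policy file://policy.json`.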

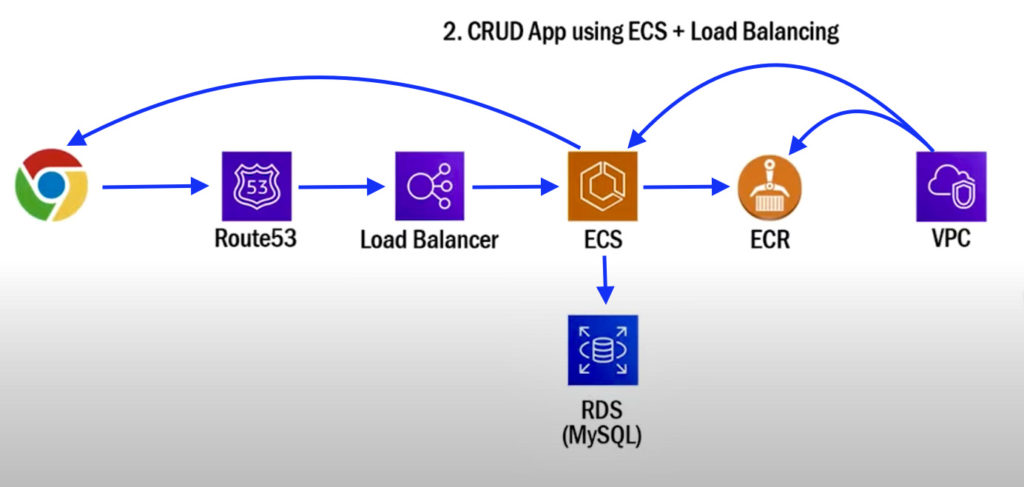

Project #2: CRUD App using ECS + Load Balancing

Host a CRUD (Create, Read, Update, Delete) application, packaged as a Docker image, on AWS ECS and balance incoming traffic with a load balancer. This is a very common setup for applications that need high scalability. ECS also lets you add Docker containers with minimal effort.

AWS services involved

- Elastic Container Service | ECS (Container orchestration service; fully managed service used for deploying, managing, and scaling containerized applications)

- Elastic Container Registry | ECR (Docker container registry; fully managed registry that allows you to store, share and deploy container images)

- Virtual Private Cloud | VPC (Allows you to logically isolate your resources in the virtual network you define, basically shielding your resources from unwanted internal or external access)

- Load Balancer (Used to automatically distribute incoming traffic to your application across multiple targets)

- Route 53 (Cloud Domain Name System web service; helps route end users to internet applications hosted on AWS or elsewhere)

- Relational Database Service | RDS (Managed cloud database service; supports engines such as MySQL and PostgreSQL; allows you to easily operate and scale your relational databases in the cloud)

Prerequisites

- Docker Container Image with application code that interacts with RDS

- AWS account and IAM user with necessary permissions

Development

- Push your Docker container image to ECR.

- Next, create an ECS cluster in your desired VPC, and point your ECS service to the Docker image you pushed to ECR.

- There are two ways to run ECS: serverless with Fargate, or non-serverless on EC2 instances. For this example, use Fargate to avoid complications related to VPC networking and permissions.

- Next, set up an RDS database running MySQL.

- Then set up a load balancer and point it to your ECS service so it distributes traffic across the containers you are hosting.

- Create a Route 53 DNS entry and configure it to map your domain name to your Load Balancer.

- With this in place, when someone accesses your application, Route 53 resolves your domain name to the load balancer, which then forwards the request to one of the containers running on ECS.

- Because your application code is written to interact with RDS, the container runs the requested operation against the database (creating, reading, updating, or deleting data) and returns the result to the caller.
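To make the four CRUD operations concrete, here is a minimal sketch. It uses Python's built-in `sqlite3` module as a stand-in so it runs anywhere; against RDS for MySQL you would use a MySQL driver instead, and while the SQL would be largely the same, the parameter placeholder syntax may differ. The `items` table and the function names are hypothetical.

```python
import sqlite3


def init_db(conn: sqlite3.Connection) -> None:
    # Hypothetical table used only for illustration
    conn.execute(
        "CREATE TABLE IF NOT EXISTS items ("
        "id INTEGER PRIMARY KEY AUTOINCREMENT, name TEXT NOT NULL)"
    )


def create_item(conn: sqlite3.Connection, name: str) -> int:
    cur = conn.execute("INSERT INTO items (name) VALUES (?)", (name,))
    conn.commit()
    return cur.lastrowid  # id of the new row


def read_item(conn: sqlite3.Connection, item_id: int):
    row = conn.execute("SELECT id, name FROM items WHERE id = ?", (item_id,)).fetchone()
    return None if row is None else {"id": row[0], "name": row[1]}


def update_item(conn: sqlite3.Connection, item_id: int, name: str) -> bool:
    cur = conn.execute("UPDATE items SET name = ? WHERE id = ?", (name, item_id))
    conn.commit()
    return cur.rowcount == 1  # True if a row was changed


def delete_item(conn: sqlite3.Connection, item_id: int) -> bool:
    cur = conn.execute("DELETE FROM items WHERE id = ?", (item_id,))
    conn.commit()
    return cur.rowcount == 1  # True if a row was removed


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    init_db(conn)
    item_id = create_item(conn, "first item")
    print(read_item(conn, item_id))  # {'id': 1, 'name': 'first item'}
    update_item(conn, item_id, "renamed")
    print(read_item(conn, item_id))  # {'id': 1, 'name': 'renamed'}
    delete_item(conn, item_id)
    print(read_item(conn, item_id))  # None
```

In the containerized app, each of these functions would typically sit behind an HTTP route, so the load balancer forwards a request to a container, which runs the matching function against the database.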

To summarize, write application code that interacts with an RDS MySQL database, build a Docker image of it, and upload the image to ECR. Deploy an ECS cluster in a specific VPC using that image. Put a load balancer in front of the ECS service to distribute incoming traffic across its containers, and set up Route 53 to direct your domain to the load balancer.
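Running the image on Fargate requires an ECS task definition. Below is a minimal sketch of one, built as a Python dict and serialized to JSON. The family and container names, the account ID `123456789012`, the region, the role name, and the port are hypothetical placeholders. Fargate tasks must use the `awsvpc` network mode, and `cpu`/`memory` must be a supported pairing (256 CPU units with 512 MiB is the smallest).

```python
import json

# All of these values are hypothetical placeholders.
ACCOUNT_ID = "123456789012"
REGION = "us-east-1"
IMAGE_URI = f"{ACCOUNT_ID}.dkr.ecr.{REGION}.amazonaws.com/crud-app:latest"


def fargate_task_definition(image_uri: str, container_port: int = 80) -> dict:
    """Build a minimal ECS task definition that runs one container on Fargate."""
    return {
        "family": "crud-app",
        "requiresCompatibilities": ["FARGATE"],
        "networkMode": "awsvpc",  # required for Fargate tasks
        "cpu": "256",             # 0.25 vCPU
        "memory": "512",          # MiB; a valid pairing with 256 CPU units
        # Role that ECS uses to pull the image from ECR (and write logs)
        "executionRoleArn": f"arn:aws:iam::{ACCOUNT_ID}:role/ecsTaskExecutionRole",
        "containerDefinitions": [
            {
                "name": "crud-app",
                "image": image_uri,
                "essential": True,
                "portMappings": [{"containerPort": container_port, "protocol": "tcp"}],
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(fargate_task_definition(IMAGE_URI), indent=2))
```

You could save the output to a file and register it with `aws ecs register-task-definition --cli-input-json file://taskdef.json`. Because Fargate tasks use `awsvpc` networking, the load balancer's target group needs the `ip` target type.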

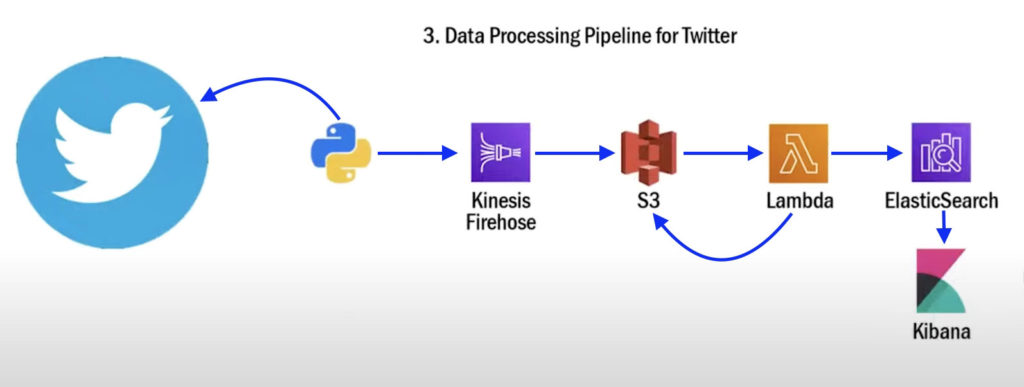

Project #3: Data Processing Pipeline for Twitter

Build a pipeline that consumes live streaming tweets from a Twitter endpoint.

AWS services involved

- Kinesis Firehose (ETL service; helps capture, transform, and deliver streaming data from sources to data lakes)

- Simple Storage Service | S3 (Object storage service; all-purpose data store for raw objects of many different types and sizes)

- Lambda (Serverless, event-driven compute service that runs your application code without you managing servers)

- Elasticsearch | ES (Managed service; search and analytics engine)

- Kibana integrated with ES (Data visualization and exploration tool)

Prerequisites

- A Twitter developer account with access to the streaming endpoint, and an API key to get live streaming tweets

- A Python script that uses this API key to consume the data and send it to Kinesis Firehose

- AWS account and IAM user with necessary permissions

Development

- Configure Kinesis Firehose to combine the incoming records into a single file and deliver it to S3.

- Firehose combines the data once a specific amount has been received or a specific interval has passed. In this case, use 5 MB of data or 5 minutes, whichever comes first.

- Set up an event notification for PUT events on the S3 bucket that stores the combined data, with the Lambda function as its target. The function is then invoked every time a file is uploaded to S3, and the event payload contains the bucket and name (key) of the uploaded file.

- The Lambda function reads the entire content of the uploaded file and indexes it in Elasticsearch.

- Finally, use the Kibana instance that comes pre-installed with Elasticsearch to create dashboards to observe and analyze your data.
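The Lambda step can be sketched as follows. The handler extracts the bucket and key from the S3 PUT notification (object keys arrive URL-encoded) and converts the file content into the newline-delimited body expected by the Elasticsearch `_bulk` API. This sketch assumes each Firehose record is a single JSON tweet followed by a newline; your producer script has to add that newline, because Firehose does not insert delimiters between records. The index name `tweets` is an assumption, and `read_object` is a hypothetical helper you would implement with boto3's `get_object`; the HTTP request to Elasticsearch is left out.

```python
import json
from urllib.parse import unquote_plus

INDEX_NAME = "tweets"  # hypothetical Elasticsearch index name


def objects_from_s3_event(event: dict) -> list:
    """Return (bucket, key) pairs for every object referenced in an S3 event notification."""
    pairs = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys are URL-encoded in the notification (e.g. spaces arrive as '+')
        key = unquote_plus(record["s3"]["object"]["key"])
        pairs.append((bucket, key))
    return pairs


def to_bulk_body(file_content: str, index: str = INDEX_NAME) -> str:
    """Turn newline-delimited JSON tweets into an Elasticsearch _bulk request body."""
    lines = []
    for raw in file_content.splitlines():
        if not raw.strip():
            continue  # ignore blank lines
        lines.append(json.dumps({"index": {"_index": index}}))  # action line
        lines.append(json.dumps(json.loads(raw)))               # document line (validated JSON)
    # The _bulk API requires the body to end with a newline
    return "\n".join(lines) + "\n" if lines else ""


def read_object(bucket: str, key: str) -> str:
    """Hypothetical helper: fetch the object's text from S3, e.g. with boto3's get_object."""
    raise NotImplementedError("fetch the object from S3 here")


def lambda_handler(event, context):
    processed = 0
    for bucket, key in objects_from_s3_event(event):
        body = to_bulk_body(read_object(bucket, key))
        # Send `body` to your domain's /_bulk endpoint with
        # Content-Type: application/x-ndjson (request signing not shown)
        processed += 1
    return {"files_processed": processed}
```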

To summarize, a Python script grabs live streaming Twitter data and sends it to Kinesis Firehose. Firehose combines the data into one file every 5 minutes or every 5 MB, whichever comes first, and stores it in an S3 data lake. The S3 bucket has a PUT notification that invokes a Lambda function with the details of the newly uploaded file. The Lambda function reads the data from S3, indexes it, and loads it into Elasticsearch, where you can analyze the indexed data with Kibana.
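The "5 MB or 5 minutes, whichever comes first" rule corresponds to Firehose's buffering hints for S3 delivery (`SizeInMBs` and `IntervalInSeconds` in the `BufferingHints` setting). The small simulation below only illustrates that rule; it is not Firehose code, and the class and method names are hypothetical. As simplifications, it measures the interval from the first buffered record and only checks the timer when a record arrives, whereas the real service also flushes on the timer alone.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class BufferSimulator:
    """Illustrates 'flush at N bytes or T seconds, whichever comes first'."""
    max_bytes: int = 5 * 1024 * 1024  # 5 MiB
    max_seconds: float = 300.0        # 5 minutes
    _records: List[bytes] = field(default_factory=list, init=False)
    _size: int = field(default=0, init=False)
    _started_at: Optional[float] = field(default=None, init=False)

    def add(self, record: bytes, now: float) -> Optional[bytes]:
        """Buffer one record; return the combined 'file' if a flush is triggered."""
        if self._started_at is None:
            self._started_at = now  # interval measured from the first buffered record
        self._records.append(record)
        self._size += len(record)
        # Simplification: the time limit is only checked when a record arrives
        if self._size >= self.max_bytes or now - self._started_at >= self.max_seconds:
            return self.flush()
        return None

    def flush(self) -> bytes:
        """Concatenate buffered records into one 'file' and reset the buffer."""
        combined = b"".join(self._records)
        self._records, self._size, self._started_at = [], 0, None
        return combined
```

With tiny limits such as `BufferSimulator(max_bytes=10, max_seconds=60)`, adding `b"abc"`, `b"def"`, and `b"ghij"` flushes once the 10-byte limit is reached, while two small records 61 seconds apart flush on the interval instead.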

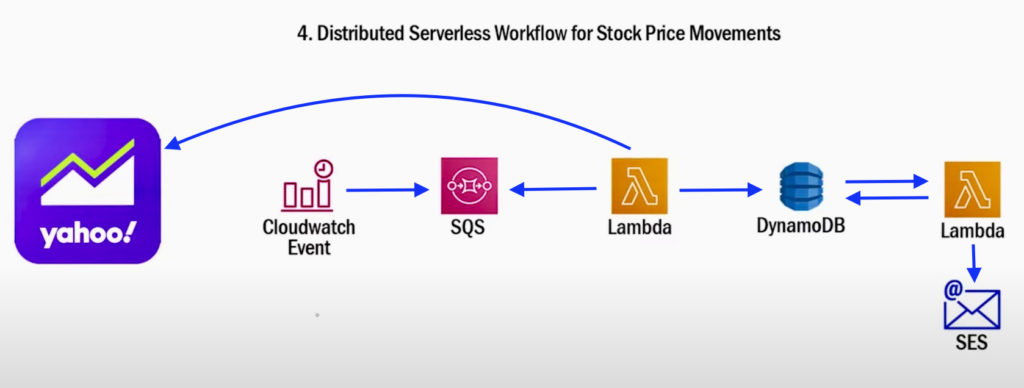

Project #4: Distributed Serverless Workflow for Stock Price Movements

Create a distributed serverless workflow that automatically detects stock price fluctuations and sends out alert notifications.

AWS services involved

- CloudWatch Events (Lets you set up rules that invoke targets on a schedule or when matching events occur)

- Simple Queue Service | SQS (Message queuing service; lets you put jobs in a queue to be processed by the respective application)

- Lambda (Serverless, event-driven compute service that runs your application code without you managing servers)

- DynamoDB (NoSQL database; fully managed; serverless)

- Simple Email Service | SES (Email service; lets you send email from within any application)

Prerequisites

- Code to read data from the Yahoo Finance API

- AWS account and IAM user with necessary permissions

Development

- Create a Lambda function with the code that reads data from the Yahoo Finance API.

- Set up a CloudWatch Events rule that runs at a one-minute interval, with the SQS queue as its target.

- Each time the rule fires, it sends a message to the SQS queue.

- With the queue configured as the Lambda function's trigger, every message put into the queue invokes and executes the function.

- Once the function has the data, save it to a DynamoDB table. Every minute, this process ingests one row for each stock.

- You can then use DynamoDB Streams to capture change events in the table. When a new row is inserted for a stock, another Lambda function, triggered by the stream, looks up the previous price in DynamoDB and computes the difference.

- If the difference is significant, that is, above a set threshold, send a custom email notification through SES.
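The comparison done by the stream-triggered Lambda function can be sketched like this. It reads `INSERT` records from a DynamoDB Streams event (with a stream view type that includes new images, such as `NEW_IMAGE` or `NEW_AND_OLD_IMAGES`), where attribute values arrive in DynamoDB's typed format such as `{"N": "123.45"}`. The attribute names `symbol` and `price`, the 2% threshold, and the `previous_prices` mapping (a stand-in for looking up the previous item in DynamoDB) are all assumptions; sending the email through SES is left out.

```python
from typing import Dict, List

THRESHOLD_PCT = 2.0  # hypothetical alert threshold, in percent


def percent_change(previous: float, latest: float) -> float:
    """Percentage change from the previous price to the latest price."""
    return (latest - previous) / previous * 100.0


def find_alerts(stream_event: dict, previous_prices: Dict[str, float],
                threshold_pct: float = THRESHOLD_PCT) -> List[dict]:
    """Return alerts for newly inserted prices that moved at least threshold_pct."""
    alerts = []
    for record in stream_event.get("Records", []):
        if record.get("eventName") != "INSERT":
            continue  # only react to newly ingested rows
        image = record["dynamodb"]["NewImage"]
        symbol = image["symbol"]["S"]        # string attribute
        latest = float(image["price"]["N"])  # numbers arrive as strings
        previous = previous_prices.get(symbol)
        if previous is None:
            continue  # first data point for this stock: nothing to compare
        change = percent_change(previous, latest)
        if abs(change) >= threshold_pct:
            alerts.append({"symbol": symbol, "previous": previous,
                           "latest": latest, "change_pct": round(change, 2)})
    return alerts
```

Each returned alert could then be formatted into the email body that the function sends through SES.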

To summarize, a Lambda function reads Yahoo Finance data whenever it is triggered by the SQS queue. Messages arrive in this queue from a CloudWatch Events rule that runs every minute. The function saves the data to a DynamoDB table. Another Lambda function computes the price difference between the latest and previous records in DynamoDB, and a notification is sent through SES when the latest price for a stock differs significantly or crosses a certain threshold.
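The ingestion side of this workflow could look roughly like the sketch below. A rule with the schedule expression `rate(1 minute)` puts a message on the queue, and the Lambda function receives the messages in `event["Records"]`, each with a `body`. This sketch assumes the rule sends the full scheduled event (the default), whose `time` field is used as the timestamp. `fetch_quotes` is a hypothetical stand-in for your Yahoo Finance code, the watch list is hypothetical, and the table layout (partition key `symbol`, sort key `ts`) is an assumption; the items use the typed attribute format accepted by DynamoDB's low-level `PutItem` API.

```python
import json
from datetime import datetime, timezone
from typing import Dict, List

SYMBOLS = ["AAPL", "AMZN"]  # hypothetical watch list


def fetch_quotes(symbols: List[str]) -> Dict[str, float]:
    """Hypothetical stand-in for your Yahoo Finance code; returns a fixed price here."""
    return {symbol: 100.0 for symbol in symbols}


def to_dynamodb_item(symbol: str, price: float, ts: int) -> dict:
    """Build one item in DynamoDB's typed (low-level API) attribute format."""
    return {
        "symbol": {"S": symbol},     # partition key (assumed table layout)
        "ts": {"N": str(ts)},        # sort key: epoch seconds (assumed)
        "price": {"N": str(price)},  # numbers are sent as strings
    }


def lambda_handler(event, context):
    """Triggered by SQS; each message body is the scheduled event sent by the rule."""
    items = []
    for record in event.get("Records", []):
        scheduled_event = json.loads(record["body"])
        # Scheduled events carry a UTC timestamp such as "2024-01-01T00:00:00Z"
        fired_at = datetime.strptime(scheduled_event["time"], "%Y-%m-%dT%H:%M:%SZ")
        ts = int(fired_at.replace(tzinfo=timezone.utc).timestamp())
        for symbol, price in fetch_quotes(SYMBOLS).items():
            items.append(to_dynamodb_item(symbol, price, ts))
    # In a real function, write each item with DynamoDB's PutItem (or a batch write)
    return items
```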

Conclusion

We hope you try them all and have fun experimenting!

We will be creating videos for each project listed in this article, so subscribe to this YouTube channel and keep an eye out for those videos coming soon!

You may be interested in…

S3 Static Hosting – https://youtu.be/mls8tiiI3uc

Flask based Docker Application with Aurora Serverless – https://youtu.be/NM4Vd7fpZWk

Stock Tracking App – https://youtu.be/XoMSzGybxZg