What does a CloudWatch alarm in the Insufficient Data state mean?

Before we can understand why an alarm would be in Insufficient Data state, we first need to understand three factors about how alarm state is determined:

- The available configuration parameters on any alarm (mostly time period and datapoints to alarm).

- Whether the data you’re tracking is period driven or event driven.

- Whether your datapoints are breaching or non-breaching.

Let's quickly go over these concepts now.

Time Period and Datapoints To Alarm

The first thing to remember is how time periods work. Time periods are fixed, non-overlapping windows. You can choose from several periods, such as 1 minute, 5 minutes, or 1 hour. You can even go as low as 10 seconds, provided the metric supports this granularity.

Time periods are aligned to the top of the hour, so a 5-minute period covers 12:00 to 12:05, then 12:05 to 12:10, and so on. Datapoints to alarm is the number of those periods (for example, 3) out of the most recent evaluation periods (for example, 5) that must be breaching before the alarm fires.
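As a rough illustration of fixed, hour-aligned windows, the sketch below maps a timestamp to the start of the period that contains it. It assumes the period evenly divides an hour (10 s, 60 s, 300 s, 3600 s and so on) and that timestamps are timezone-aware; `period_start` is a hypothetical helper, not part of any AWS SDK.

```python
from datetime import datetime, timezone


def period_start(ts: datetime, period_seconds: int) -> datetime:
    """Return the start of the fixed, non-overlapping window containing ts.

    Assumes ts is timezone-aware and period_seconds divides 3600, so the
    windows line up with the top of the hour.
    """
    epoch = int(ts.timestamp())
    # Drop the remainder so every timestamp snaps back to its window start.
    start = epoch - (epoch % period_seconds)
    return datetime.fromtimestamp(start, tz=timezone.utc)
```

For example, an event at 12:07:42 UTC falls in the 5-minute window starting at 12:05:00 and in the 1-hour window starting at 12:00:00.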

Note that there are generally two categories of metrics: period driven and event driven.

Period Driven metrics correspond to datapoints that are emitted at regularly scheduled intervals. Metrics such as % CPU Utilization or % Disk Capacity fall into this category.

For every time period, data is published to CloudWatch, so there is always a datapoint (for example, the CPU percentage) to evaluate. This means you generally do not need to worry about the ‘Insufficient Data’ alarm state: your metric is always being emitted, so missing data does not occur (except in certain rare circumstances, such as right after the alarm or resource is created).

Event Driven metrics correspond to individual occurrences in an application. Errors are a good example: we only send an error metric to CloudWatch when an error occurs. This type of metric is much more prone to the Insufficient Data problem, since there can be long periods with no invocations, and therefore no errors.

One important thing to remember is that if there aren’t any error events during a time period, CloudWatch treats that period as missing (empty or null) rather than as zero. This plays an important role in how Insufficient Data scenarios come about.
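To see why an idle period is missing rather than zero, here is a small sketch that buckets hypothetical error timestamps into fixed periods. The function `sum_per_period` and its parameters are illustrative assumptions, not a CloudWatch API. It only mimics the idea that a period with no events produces no datapoint at all.

```python
from typing import Optional


def sum_per_period(
    event_times: list[int], start: int, end: int, period: int
) -> list[Optional[int]]:
    """Count events in each fixed period between start and end (epoch seconds).

    Periods with no events come back as None (missing), not 0.
    """
    counts: dict[int, int] = {}
    for t in event_times:
        if start <= t < end:
            index = (t - start) // period
            counts[index] = counts.get(index, 0) + 1
    # counts.get returns None for periods that never saw an event.
    return [counts.get(i) for i in range((end - start) // period)]
```

For example, two errors at seconds 130 and 150 of a 5-minute window with 1-minute periods give `[None, None, 2, None, None]`. That is a single datapoint surrounded by missing periods, not a list containing zeros.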

Breaching and Non-Breaching

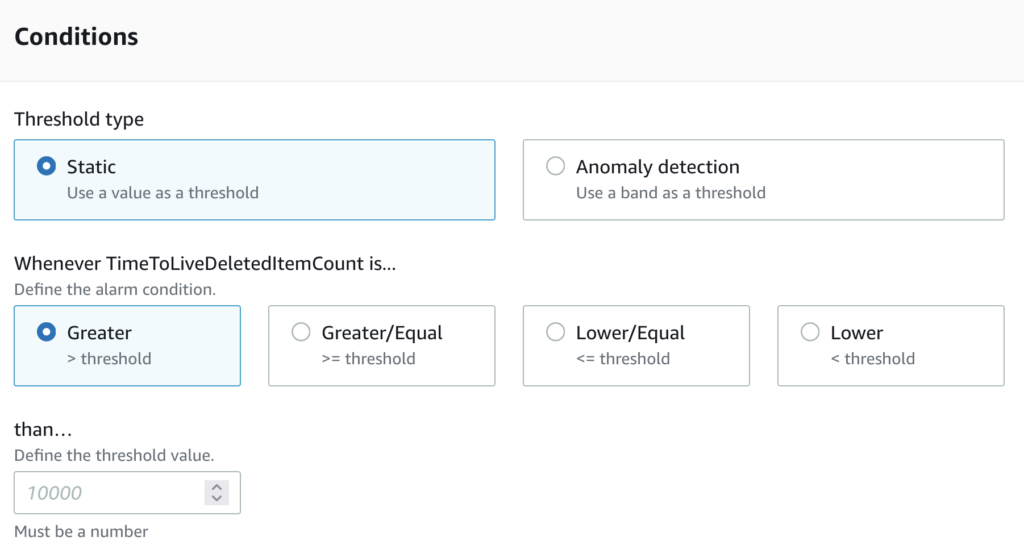

When we create an alarm, we set a breaching threshold and a comparison operator. For example, if we wanted to alarm whenever we have 5 or more errors in 15 minutes, we would use a 15-minute period, set the threshold to 5, and set the operator to greater than or equal to (a plain greater than would only fire at 6 or more errors).
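As a sketch of how the example above might be expressed in code, the function below builds keyword arguments for CloudWatch's `PutMetricAlarm` API as exposed by boto3. It assumes the errors come from a Lambda function (namespace `AWS/Lambda`, metric `Errors`, dimension `FunctionName`); `error_alarm_params` and the function name `my-function` are hypothetical. The function only returns a dictionary, so nothing is sent to AWS.

```python
def error_alarm_params(function_name: str) -> dict:
    """Build PutMetricAlarm arguments for '5 or more errors in 15 minutes'.

    Assumption: the metric is a Lambda function's Errors count.
    """
    return {
        "AlarmName": f"{function_name}-errors",
        "Namespace": "AWS/Lambda",
        "MetricName": "Errors",
        "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
        "Statistic": "Sum",  # add up every error event in the period
        "Period": 900,  # one 15-minute period, in seconds
        "EvaluationPeriods": 1,
        "DatapointsToAlarm": 1,
        "Threshold": 5.0,
        # "5 or more" needs >=; GreaterThanThreshold would mean 6 or more.
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
    }
```

With credentials configured, these arguments could be passed as `boto3.client("cloudwatch").put_metric_alarm(**error_alarm_params("my-function"))`.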

For the time period being evaluated, there is a single datapoint (which can aggregate multiple individual events, for example by summing them). If the value meets the threshold (5 or more, in the example above), the datapoint is considered breaching. If it is below the threshold, it isn’t.

Remember, by default a period can only be breaching when it has a datapoint to evaluate. A period with no datapoint at all is neither breaching nor non-breaching, unless you change how missing data is treated (covered below).
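The rule above can be sketched as a small function. The operator names are the `ComparisonOperator` values CloudWatch accepts for static thresholds. `is_breaching` itself is a hypothetical helper that returns `None` when there is no datapoint, to show that a missing period is neither breaching nor non-breaching.

```python
import operator
from typing import Optional

# CloudWatch ComparisonOperator values mapped to Python comparisons.
OPERATORS = {
    "GreaterThanOrEqualToThreshold": operator.ge,
    "GreaterThanThreshold": operator.gt,
    "LessThanThreshold": operator.lt,
    "LessThanOrEqualToThreshold": operator.le,
}


def is_breaching(
    value: Optional[float], threshold: float, comparison: str
) -> Optional[bool]:
    """True/False for a real datapoint; None when the period has no datapoint."""
    if value is None:
        return None
    return OPERATORS[comparison](value, threshold)
```

Note that exactly 5 errors breaches with `GreaterThanOrEqualToThreshold` but not with `GreaterThanThreshold`, which is why the operator matters for "5 or more".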

Now What Does Insufficient Data Mean?

Generally, Insufficient Data can occur in the following three scenarios. The first two are closely related, so I explain them together in the section below.

- There are not enough datapoints to evaluate the alarm

- The alarm was just created (which leads to the first scenario)

- Incorrect alarm configuration

Let's run through these scenarios now.

Not Enough Datapoints & Recent Alarm Creation

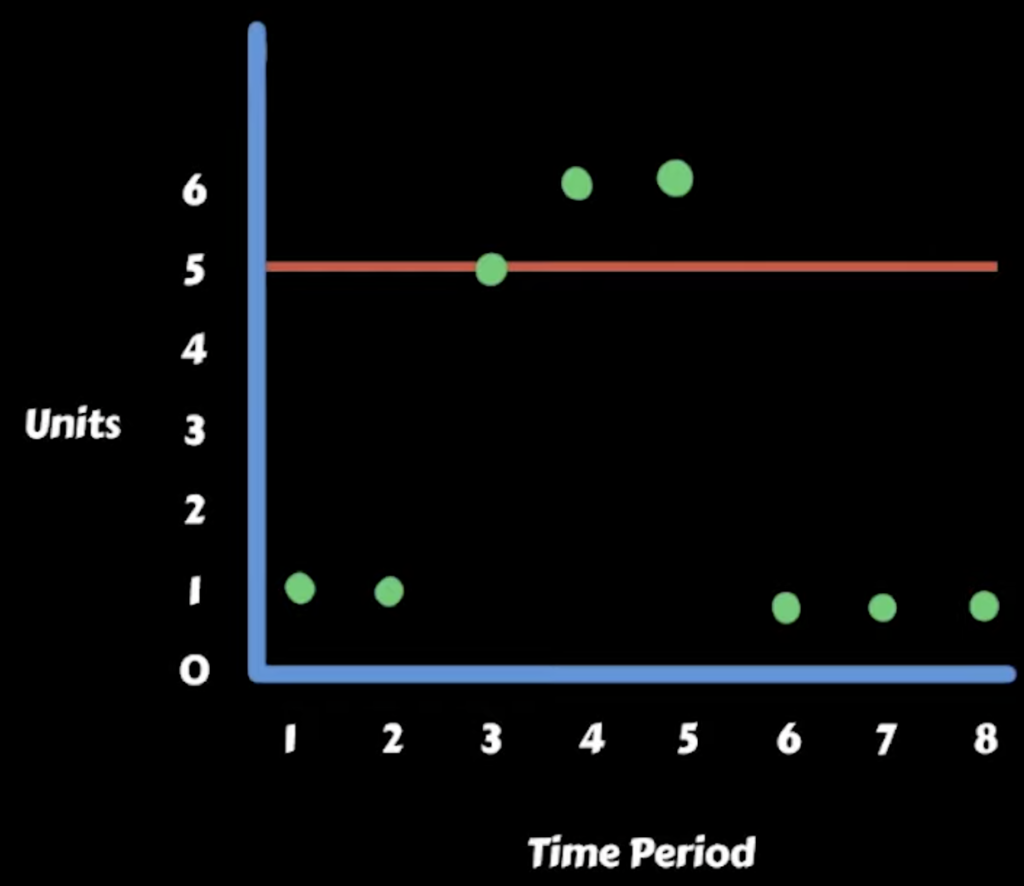

Say we set up an alarm that fires if 3 out of 5 datapoints are breaching a threshold. Also assume we’re looking at an event-driven metric such as invocation errors. Lastly, the alarm uses a period of 1 minute.

Now assume we have the following distribution of errors at the respective times:

- Minute 1 – No Data (No invocations, thus no errors)

- Minute 2 – No Data (No invocations, thus no errors)

- Minute 3 – Breaching

- Minute 4 – No Data (No invocations, thus no errors)

- Minute 5 – No Data (No invocations, thus no errors)

In this example, the alarm would be put in the INSUFFICIENT DATA state simply because there is not enough data to make a successful evaluation. More specifically, we are looking for at least 3 of the 5 datapoints to be breaching. Since we only have 1, the evaluation cannot reach a conclusion, and we are stuck with insufficient data.
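The reasoning above can be sketched as a simplified "M out of N" check. This is a model of the article's explanation, not CloudWatch's exact algorithm (AWS documents additional rules for missing data). Each period is `True` (breaching), `False` (not breaching) or `None` (no datapoint). The hypothetical `alarm_state` returns ALARM when enough periods breach and OK when too many periods are known-good for ALARM to be possible. Otherwise it cannot conclude.

```python
from typing import Optional


def alarm_state(points: list[Optional[bool]], datapoints_to_alarm: int) -> str:
    """Simplified M-out-of-N evaluation; None marks a period with no datapoint."""
    n = len(points)
    breaching = sum(1 for p in points if p is True)
    not_breaching = sum(1 for p in points if p is False)
    if breaching >= datapoints_to_alarm:
        return "ALARM"
    # ALARM is ruled out only if even treating every missing period as
    # breaching could not reach datapoints_to_alarm.
    if not_breaching > n - datapoints_to_alarm:
        return "OK"
    return "INSUFFICIENT_DATA"
```

Feeding in the five minutes from the example, `alarm_state([None, None, True, None, None], 3)` gives `"INSUFFICIENT_DATA"`. A period-driven metric that never breaches, `[False] * 5`, gives `"OK"`.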

Note that this behaves quite differently for period-driven metrics. In that case, you will have a datapoint for every time period, so the only time you can have an insufficient data scenario is when the alarm or resource has just been created and not enough datapoints have been populated yet.

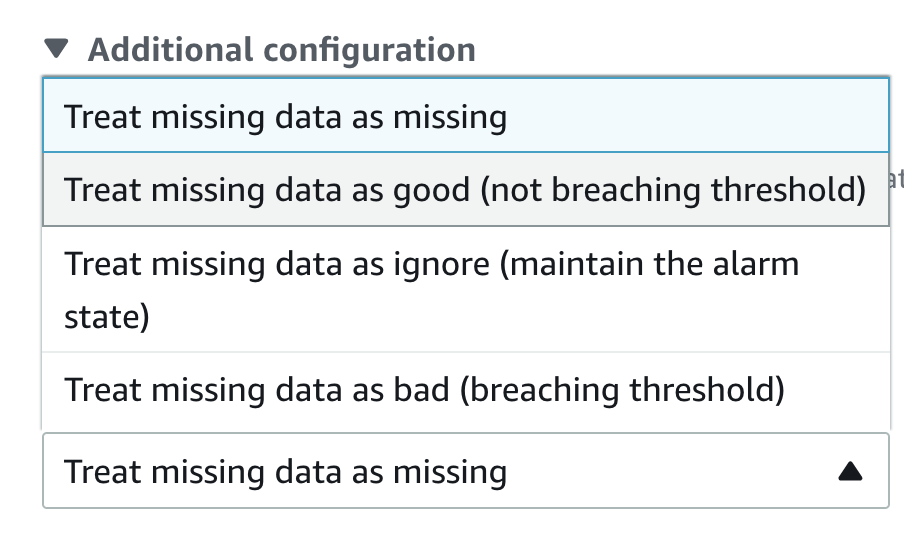

Do note that you have the option to tell CloudWatch how to handle missing data. You can treat missing data as missing, as not breaching (good), as breaching (bad), or ignore it and maintain the current alarm state. The option you select can help you fine-tune how your alarm behaves in the insufficient data scenario. The screenshot above shows the options available in the AWS console.
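To show how each option changes the outcome, here is a sketch that extends the simplified evaluation model with the four `TreatMissingData` values the CloudWatch API accepts: `missing`, `notBreaching`, `breaching` and `ignore`. The fill-in rules and the "keep the current state" behaviour for `ignore` are a simplified assumption for illustration, and `alarm_state_with_missing` is a hypothetical helper.

```python
from typing import Optional


def alarm_state_with_missing(
    points: list[Optional[bool]],
    datapoints_to_alarm: int,
    treat_missing_data: str = "missing",
    current_state: str = "OK",
) -> str:
    """Simplified evaluation where None periods are handled per TreatMissingData."""
    if treat_missing_data == "breaching":
        points = [True if p is None else p for p in points]
    elif treat_missing_data == "notBreaching":
        points = [False if p is None else p for p in points]
    n = len(points)
    breaching = sum(1 for p in points if p is True)
    not_breaching = sum(1 for p in points if p is False)
    if breaching >= datapoints_to_alarm:
        return "ALARM"
    if not_breaching > n - datapoints_to_alarm:
        return "OK"
    # Still undecided: "ignore" keeps the existing state instead.
    if treat_missing_data == "ignore":
        return current_state
    return "INSUFFICIENT_DATA"
```

With the earlier example `[None, None, True, None, None]` and 3 datapoints to alarm, the sketch gives INSUFFICIENT_DATA for `missing`, ALARM for `breaching`, OK for `notBreaching`, and whatever the alarm was already in for `ignore`.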

Incorrect Alarm Configuration

When selecting a time period for an alarm, you have many options available, with the lowest being 10 seconds! Unfortunately, this can be a little misleading. AWS lets you select these short periods regardless of the metric, but most metrics have a minimum granularity of 1 minute.

That means if you select 10 seconds as the alarm’s time period but the metric only supports 1 minute, CloudWatch will never have data at that granularity, and your alarm will be stuck in a permanent Insufficient Data state.
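One way to guard against this misconfiguration is to check the period before creating the alarm. The sketch below encodes the rule that an alarm period must be 10, 30, or a multiple of 60 seconds. It also flags periods shorter than the metric's resolution. It assumes you already know that resolution, for example 60 seconds for a standard-resolution metric. `check_alarm_period` is a hypothetical helper, not an AWS API.

```python
def check_alarm_period(period_seconds: int, metric_resolution_seconds: int) -> list[str]:
    """Return a list of problems with an alarm period (empty list means it looks fine).

    Assumption: metric_resolution_seconds is known to the caller
    (e.g. 60 for standard-resolution metrics, 1 for high-resolution ones).
    """
    problems = []
    if period_seconds not in (10, 30) and period_seconds % 60 != 0:
        problems.append("period must be 10, 30, or a multiple of 60 seconds")
    if period_seconds < metric_resolution_seconds:
        # Finer than the metric is published, so the alarm is likely to sit
        # in INSUFFICIENT_DATA, as described above.
        problems.append(
            f"period {period_seconds}s is finer than the metric's "
            f"{metric_resolution_seconds}s resolution"
        )
    return problems
```

For example, `check_alarm_period(10, 60)` reports one problem, while `check_alarm_period(60, 60)` returns an empty list.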

Summary

Insufficient data can occur for a variety of reasons. It is most prevalent for event-driven metrics rather than period-driven metrics, which emit data consistently. For event-driven metrics, it usually results from not enough datapoints being present (missing data) in the evaluation window to reach a conclusion. For any metric, it can also occur right after the alarm or resource is created, while metrics are still being propagated to CloudWatch, or when the alarm’s period is finer than the metric’s granularity.