AWS Lambda’s launch in late 2014 marked a turning point in how developers think about and use compute infrastructure. Prior to Lambda, the default choice for compute infrastructure was Elastic Compute Cloud (EC2). Despite its flexibility, EC2 came with its fair share of headaches: painful maintenance, security concerns, and, most important of all, cost.

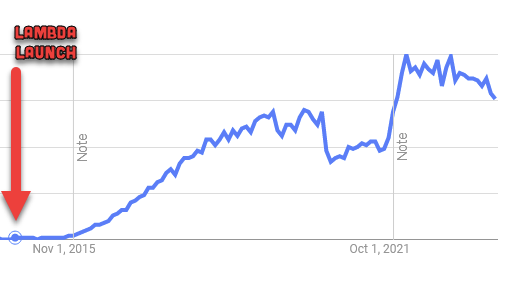

Lambda’s introduction of the “pay for what you use” and “scale down to zero” model quickly lured developers away from their bare-metal setups and onto managed compute infrastructure. It’s around this time that the term “Serverless” really began to pick up steam, as the Google search trend graph shows.

Since then, developers have come to a common understanding of what the term Serverless means. Most think of it in terms of AWS Lambda: we are metered on actual usage of the service (function invocations) and are not charged for idle time. But this community understanding does not seem to align with what AWS thinks Serverless means.

Recent product launches like Aurora Serverless, Redshift Serverless, and OpenSearch Serverless use a cost model that deviates from the one AWS Lambda uses, specifically with regard to minimum monthly cost. With Lambda, you are charged zero dollars if you deploy a function and never invoke it. This is not the case with the new Aurora, Redshift, and OpenSearch Serverless variants. In fact, with these services, the minimum you must spend even when the service is not in use can be pretty shocking. It’s this disconnect that is a cause for concern.

Now let’s look at how “Serverless” pricing works across three different AWS services: Aurora, Redshift, and OpenSearch.

Aurora Serverless

Aurora Serverless is an interesting case, mainly because of its product history. The original Aurora Serverless variant (V1) supported scale-down-to-zero behaviour. This means that when the database was not in use for a prolonged period, or due to manual scheduling, it would scale all the way down and you wouldn’t be charged a dime for compute. When activity resumed, you would start getting billed. No complaints here.

Aurora Serverless V2 was launched in April 2022 and departed from this behaviour. With V2, you must set a minimum number of Aurora Capacity Units (ACUs) when setting up your cluster. These capacity units represent the hardware characteristics of the underlying compute node running your database–the more units, the more CPU and memory your machine is given.

Unfortunately, the lowest value you can set for your Aurora Serverless V2 database is 0.5 ACUs. This translates to a minimum spend of $44.83 per month. I wish I could say this is the worst example, but it’s far from it. Let’s look at two more.
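To make the arithmetic behind a capacity floor concrete, here is a minimal sketch. The $0.12 per ACU-hour rate and the 730-hour month are assumptions for illustration, not figures taken from the estimate above, and `monthly_floor` is a hypothetical helper. Rates vary by region, so expect the result to land near the quoted number rather than exactly on it.

```python
HOURS_PER_MONTH = 730  # assumption: average number of hours in a month


def monthly_floor(min_units: float, rate_per_unit_hour: float,
                  hours: float = HOURS_PER_MONTH) -> float:
    """Cost of keeping the minimum capacity provisioned for a whole month."""
    return min_units * rate_per_unit_hour * hours


# Assumed rate of $0.12 per ACU-hour (illustrative; check your region's pricing).
aurora_floor = monthly_floor(min_units=0.5, rate_per_unit_hour=0.12)
print(f"Aurora Serverless V2 idle floor: ${aurora_floor:.2f}/month")
```

Under those assumptions the floor works out to roughly $43.80 a month, and it is billed whether or not a single query runs.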

Redshift Serverless

Redshift Serverless is a more egregious example of abusing the Serverless term, but it’s still far from the worst culprit.

With Redshift, I used the AWS Pricing Calculator to specify a “Small Workload” and selected the minimum number of Redshift Processing Units (RPUs), which is 8. I also selected 1 hour of daily runtime, which is the lowest number you can pick.

The total cost for this? $87.84 per month. If you bump up the usage to 2 hours daily, the cost increases to approximately $190. Not terrible considering how much the provisioned mode of Redshift costs, but still a pretty large number.

OpenSearch Serverless

By far the most shocking culprit is OpenSearch Serverless. With this setup, you need to specify two parameters: 1) the number of indexing OpenSearch Capacity Units (OCUs), and 2) the number of search and query OCUs. The minimum you can specify is two for each, meaning that the total minimum required is 4 OCUs.

The total cost for this setup? A whopping $700.82 per month.
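A quick back-of-the-envelope check lands within a few cents of that number. In this sketch, the $0.24 per OCU-hour rate and the 730-hour month are assumptions for illustration (rates differ by region), and `opensearch_floor` is a hypothetical helper, not part of any AWS SDK.

```python
HOURS_PER_MONTH = 730   # assumption: average number of hours in a month
OCU_HOURLY_RATE = 0.24  # assumption: USD per OCU-hour; varies by region


def opensearch_floor(indexing_ocus: int = 2, search_ocus: int = 2) -> float:
    """Monthly cost of the minimum indexing + search capacity, used or not."""
    total_ocus = indexing_ocus + search_ocus
    return total_ocus * OCU_HOURLY_RATE * HOURS_PER_MONTH


print(f"OpenSearch Serverless idle floor: ${opensearch_floor():.2f}/month")
```

With two indexing and two search OCUs, the assumed rate gives about $700.80 a month before a single document is indexed or queried.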

What Comes Next?

It’s unfortunate to see how overloaded the term Serverless has become. In recent years, I’ve tended to cringe any time I hear Andy Jassy or Adam Selipsky get on stage at re:Invent and announce a new “Serverless” service, knowing full well what it will probably entail.

The bottom line here is that the term Serverless carries a clear connotation: scale down to zero when not in use, and pay for what you use. Recent product launches use this “Serverless” branding but carry costs even when the services are not in use. In my opinion, this is an unacceptable and blatant abuse of what the term Serverless implies.

I think it’s time for AWS to think carefully about what qualifies as Serverless. Maybe pick a term like Managed Capacity or something, anything that implies there’s a minimum spend even when the service is not in use.