Introduction

In this blog post, you’ll learn how to set up an S3 trigger that will invoke a Lambda function in response to a file uploaded into an S3 bucket. We will start from scratch, and I will guide you through the process step-by-step using the AWS console.

As a small prerequisite, you’ll need an S3 bucket. In this tutorial, I’ll be using my bucket called bbd-s3-trigger-demo, as seen below.

Our first step is to create a Lambda function. Second, we’ll create the trigger that invokes our function on file upload. Finally, we’ll add code to our Lambda function that downloads the file from S3 and prints out its contents whenever the function is called.

So let’s get started.

Create Our Lambda Function

To create the Lambda function, go to the AWS Lambda console and click “Create Function.” In my case, I named my function bbd-s3-trigger-demo and I’m using Python 3.9.

When the Lambda function is triggered by an S3 notification for a newly created or updated file, we want the function to call back into S3 and retrieve that file. This requires the function to have the s3:GetObject permission. In this tutorial, we’ll let the console create an IAM role from an AWS-provided policy template that grants this permission. If you’re creating your function with infrastructure as code, such as CloudFormation or CDK, you’ll need to grant this permission explicitly.
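If you grant the permission yourself, a minimal policy for this function might look like the sketch below, built as a Python dictionary and printed as JSON. It assumes the function only reads objects from bbd-s3-trigger-demo, and it is not necessarily identical to what the console’s policy template generates. An execution role typically also needs CloudWatch Logs permissions, such as those in the AWSLambdaBasicExecutionRole managed policy, for print output to reach the logs. s3_read_policy is a hypothetical helper name.

```python
import json


def s3_read_policy(bucket_name: str) -> dict:
    """Return an IAM policy document that allows s3:GetObject on every object in bucket_name."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "s3:GetObject",
                # "/*" scopes the grant to the objects in the bucket, not the bucket itself.
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        ],
    }


if __name__ == "__main__":
    # Print the policy as JSON, e.g. to paste into an inline role policy.
    print(json.dumps(s3_read_policy("bbd-s3-trigger-demo"), indent=2))
```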

Since we’re doing this in the console, click “Change default execution role” -> “Create a new role from AWS policy template,” and then select “Amazon S3 object read-only permissions” as seen below.

Go ahead and click Create Function to proceed.

Now that we have our Lambda function set up, our next step is to create the trigger in S3 that will invoke our function whenever a file is created or updated.

Create the S3 Trigger

Click “Add trigger” as seen below and select S3.

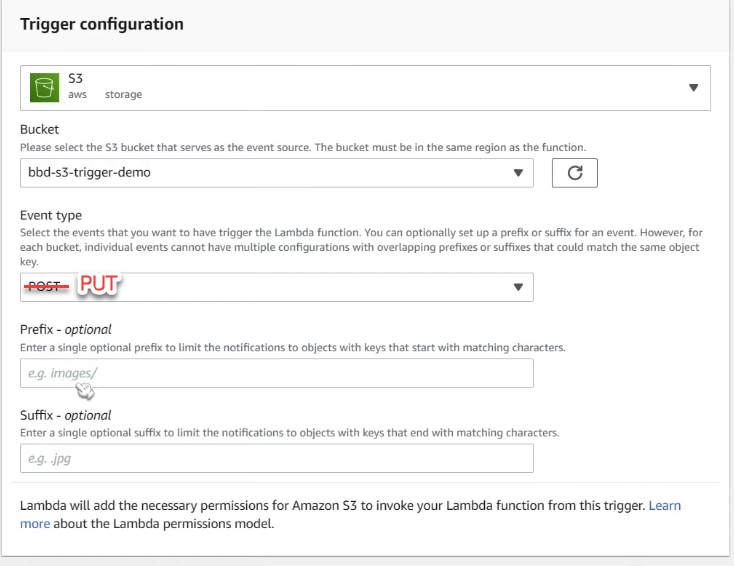

Next, select the bucket bbd-s3-trigger-demo and the event type. If files are uploaded through the SDK or the AWS console, choose PUT rather than POST; POST corresponds to browser-based uploads made with an HTML form.
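Each record in the notification carries an eventName field that says how the object was created, for example ObjectCreated:Put or ObjectCreated:Post. The helper below is a hypothetical sketch (created_via is not part of any AWS library) that pulls out that method. It is also a reminder that larger files uploaded from the console or with boto3’s upload_file may arrive as multipart uploads, which S3 reports as ObjectCreated:CompleteMultipartUpload rather than Put; selecting “All object create events” instead of PUT covers every case.

```python
# Event names as they appear in the "eventName" field of S3 notification records.
CREATE_EVENT_NAMES = {
    "ObjectCreated:Put",
    "ObjectCreated:Post",
    "ObjectCreated:Copy",
    "ObjectCreated:CompleteMultipartUpload",
}


def created_via(record: dict) -> str:
    """Return the creation method (Put, Post, ...) of an ObjectCreated record."""
    event_name = record.get("eventName", "")
    if event_name not in CREATE_EVENT_NAMES:
        raise ValueError(f"not an object-created event: {event_name!r}")
    # "ObjectCreated:Put" -> "Put"
    return event_name.split(":", 1)[1]
```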

Optionally, we can add a prefix so that the trigger is only invoked when files are uploaded to a specific folder (key prefix) within the bucket. Similarly, we can add a suffix, such as .csv, to limit which files trigger a notification. When you’re done, click “Add” to create the trigger.
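For readers following the infrastructure-as-code route mentioned earlier, here is a sketch of the same trigger expressed as the dictionary that boto3’s put_bucket_notification_configuration expects. The function ARN, the uploads/ prefix, the .csv suffix, and the helper names are hypothetical. Two caveats: that call replaces the bucket’s entire notification configuration, and S3 also needs a resource-based permission on the function (Lambda’s add_permission with principal s3.amazonaws.com), which the console adds for you when you create the trigger.

```python
def lambda_notification_config(function_arn: str, prefix: str = "", suffix: str = "") -> dict:
    """Build a bucket NotificationConfiguration that invokes function_arn on PUT uploads."""
    rules = []
    if prefix:
        rules.append({"Name": "prefix", "Value": prefix})
    if suffix:
        rules.append({"Name": "suffix", "Value": suffix})
    target = {"LambdaFunctionArn": function_arn, "Events": ["s3:ObjectCreated:Put"]}
    if rules:
        # Prefix and suffix are plain strings; wildcards are not supported in them.
        target["Filter"] = {"Key": {"FilterRules": rules}}
    return {"LambdaFunctionConfigurations": [target]}


def matches_filter(key: str, prefix: str = "", suffix: str = "") -> bool:
    """Rough local approximation of how a prefix/suffix filter selects object keys."""
    return key.startswith(prefix) and key.endswith(suffix)


def apply_config(bucket: str, notification_config: dict) -> None:
    """Send the configuration to S3. Needs AWS credentials; replaces any existing config."""
    import boto3  # imported here so the helpers above work without boto3 installed

    boto3.client("s3").put_bucket_notification_configuration(
        Bucket=bucket, NotificationConfiguration=notification_config
    )
```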

Finally, we need to modify our Lambda to understand the notification event.
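Before changing the code, it helps to see the part of the notification the function will read. The dictionary below is a trimmed, hand-written example rather than a captured event (the key cars.csv is hypothetical, and real events carry more fields such as eventTime and awsRegion), with the same nested path the handler uses to pull out the bucket and key.

```python
# A trimmed-down S3 notification event containing only the fields used in this tutorial.
sample_event = {
    "Records": [
        {
            "eventSource": "aws:s3",
            "eventName": "ObjectCreated:Put",
            "s3": {
                "bucket": {"name": "bbd-s3-trigger-demo"},
                "object": {"key": "cars.csv", "size": 1024},
            },
        }
    ]
}

# The same lookups the handler performs: bucket name and object key of the first record.
record = sample_event["Records"][0]
bucket = record["s3"]["bucket"]["name"]
key = record["s3"]["object"]["key"]
print(bucket, key)
```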

Modify Our Code

Note that the whole code snippet is available at the bottom of this article.

First, we’ll need to import some dependencies:

    import boto3
    import csv
    import io

- boto3: the Python AWS SDK
- csv: to process our CSV file
- io: to handle input/output as we read from our S3 response

Next, we initialize the s3Client variable, a boto3 client for S3. This is the client we’ll use to call the GetObject API on our bucket. Because it is created outside the handler, warm invocations of the function can reuse it.

    s3Client = boto3.client('s3')

Extract the Bucket Name and Key Name, Then Get the Object from S3

After initializing s3Client, we need to extract the bucket name and the key name (file name) from the event. Once we have these values, we can fetch the object from the S3 bucket with the get_object call.
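The handler below reads only the first record and uses the key exactly as it arrives. Two details are worth knowing: Records is a list, and object keys in S3 notifications are URL-encoded, so a file named my cars.csv shows up as my+cars.csv and get_object would look up the wrong key. Here is a sketch that handles both using unquote_plus from Python’s standard library; objects_from_event is a hypothetical helper name.

```python
from urllib.parse import unquote_plus


def objects_from_event(event: dict) -> list:
    """Return (bucket, key) pairs for every record, decoding URL-encoded keys."""
    pairs = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Keys arrive URL-encoded: "+" stands for a space and "%28" for "(".
        key = unquote_plus(record["s3"]["object"]["key"])
        pairs.append((bucket, key))
    return pairs
```

Inside lambda_handler, you would then loop over objects_from_event(event) and call get_object once per pair.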

    def lambda_handler(event, context):
        # Get our bucket and file name
        bucket = event['Records'][0]['s3']['bucket']['name']
        key = event['Records'][0]['s3']['object']['key']

        # Get our object
        response = s3Client.get_object(Bucket=bucket, Key=key)

Finally, we need to process the CSV file. First, we read the file’s data from the get_object response and decode it as UTF-8. Then, we parse it with csv.reader from Python’s csv module.

We use io.StringIO to wrap the decoded string in a file-like object that csv.reader can iterate over. Next, we skip the header row with next(reader) and iterate through the remaining rows. Finally, we print the year, mileage, and price, which correspond to the first, second, and third columns of the CSV, respectively.
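To check the parsing step locally without S3, the same logic can be pulled into a plain function. This sketch assumes the CSV has a header row followed by Year, Mileage, and Price columns; format_rows is a hypothetical helper. It differs from the handler code in two small ways: next(reader, None) avoids a StopIteration on an empty file, and rows with fewer than three fields (a blank line comes back from csv.reader as an empty list) are skipped instead of raising IndexError.

```python
import csv
import io


def format_rows(data: str) -> list:
    """Parse CSV text, skip the header row, and format year, mileage, and price."""
    reader = csv.reader(io.StringIO(data))
    next(reader, None)  # skip the header; the default avoids StopIteration on empty input
    lines = []
    for row in reader:
        if len(row) < 3:
            continue  # skip blank or short lines
        year, mileage, price = row[0], row[1], row[2]
        lines.append(f"Year - {year}, Mileage - {mileage}, Price - {price}")
    return lines


if __name__ == "__main__":
    sample = "Year,Mileage,Price\n2015,60000,12000\n"
    for line in format_rows(sample):
        print(line)
```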

        # Process the data
        data = response['Body'].read().decode('utf-8')
        reader = csv.reader(io.StringIO(data))
        next(reader)  # skip the header row
        for row in reader:
            print(f"Year - {row[0]}, Mileage - {row[1]}, Price - {row[2]}")

After completing the handler, deploy the code by clicking the “Deploy” button.

We’re now ready to test the functionality.

Generate a Test Event

To confirm that our code works as intended, you may want to create a test event and invoke the function with it manually.

To create a test event that has the same shape as an S3 event notification, select the s3-put template as seen below. The template gives you an example record that closely resembles the one your function receives when a file is created in S3.
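The s3-put template ships with placeholder bucket and key values, so a manual test of the deployed function will likely fail at get_object until you edit them to point at an object that exists in your bucket. Another option is to run the handler logic locally against a fake client. The sketch below is an illustration under stated assumptions: FakeS3Client and handle are hypothetical names, and the fake only mimics the Body.read() call that this tutorial’s code relies on.

```python
import csv
import io


class FakeS3Client:
    """Hypothetical stand-in for a boto3 S3 client; only get_object is implemented."""

    def __init__(self, objects: dict):
        # Maps (bucket, key) to the object's raw bytes.
        self.objects = objects

    def get_object(self, Bucket: str, Key: str) -> dict:
        # boto3 returns a StreamingBody under "Body"; BytesIO offers the same read().
        return {"Body": io.BytesIO(self.objects[(Bucket, Key)])}


def handle(event: dict, s3_client) -> list:
    """The tutorial's handler steps, with the S3 client passed in instead of global."""
    record = event["Records"][0]
    bucket = record["s3"]["bucket"]["name"]
    key = record["s3"]["object"]["key"]
    response = s3_client.get_object(Bucket=bucket, Key=key)
    data = response["Body"].read().decode("utf-8")
    reader = csv.reader(io.StringIO(data))
    next(reader, None)  # skip the header row
    return [f"Year - {r[0]}, Mileage - {r[1]}, Price - {r[2]}" for r in reader if len(r) >= 3]


if __name__ == "__main__":
    test_event = {
        "Records": [
            {"s3": {"bucket": {"name": "bbd-s3-trigger-demo"}, "object": {"key": "cars.csv"}}}
        ]
    }
    fake = FakeS3Client({("bbd-s3-trigger-demo", "cars.csv"): b"Year,Mileage,Price\n2015,60000,12000\n"})
    for line in handle(test_event, fake):
        print(line)
```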

At this point, we’re ready to test out our setup by uploading a file into S3.

Testing It Out

Head over to your S3 bucket, click “Add files,” select a CSV file, and click “Upload.”
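If you’d rather upload from a script, here is a sketch using boto3’s put_object, which sends the file in a single request and so should produce a PUT event that matches the trigger configured above. make_csv, upload_csv, the key cars.csv, and the Year, Mileage, Price layout are hypothetical; running upload_csv needs AWS credentials with s3:PutObject on the bucket.

```python
import csv
import io


def make_csv(rows: list) -> str:
    """Build CSV text with a Year, Mileage, Price header (hypothetical layout)."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)  # default dialect ends each row with "\r\n"
    writer.writerow(["Year", "Mileage", "Price"])
    writer.writerows(rows)
    return buffer.getvalue()


def upload_csv(bucket: str, key: str, text: str) -> None:
    """Upload with a single PutObject request, which S3 reports as ObjectCreated:Put."""
    import boto3  # imported here so make_csv works without boto3 installed

    boto3.client("s3").put_object(
        Bucket=bucket, Key=key, Body=text.encode("utf-8"), ContentType="text/csv"
    )
```

For example, upload_csv("bbd-s3-trigger-demo", "cars.csv", make_csv([(2015, 60000, 12000)])) would upload a one-row file that the handler can parse.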

After the upload completes, we’ll want to confirm that the Lambda function was triggered and our code ran successfully. Head over to the Monitor tab of your Lambda function to view its invocation history. Note that it can take a few minutes after uploading your file for the metrics to appear.

To see our results faster, we can click on “View logs in CloudWatch” and look at the latest log stream at the top of the page.

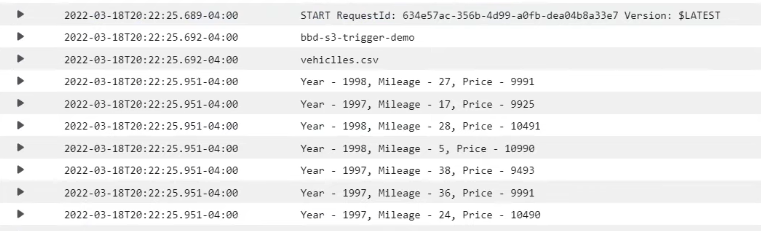

Clicking on the log stream reveals the Lambda’s execution logs. In our case, it shows the printed contents of the file that we uploaded into S3 as seen below.

Enjoy the article? You may enjoy the others:

- AWS S3 Core Concepts – The Things You Need To Know

- An Introduction to AWS Lambda

- AWS SNS to Lambda Step by Step Tutorial

Full Code Snippet

    import boto3
    import csv
    import io

    s3Client = boto3.client('s3')

    def lambda_handler(event, context):
        # Get our bucket and file name
        bucket = event['Records'][0]['s3']['bucket']['name']
        key = event['Records'][0]['s3']['object']['key']
        print(bucket)
        print(key)

        # Get our object
        response = s3Client.get_object(Bucket=bucket, Key=key)

        # Process it
        data = response['Body'].read().decode('utf-8')
        reader = csv.reader(io.StringIO(data))
        next(reader)  # skip the header row
        for row in reader:
            print(f"Year - {row[0]}, Mileage - {row[1]}, Price - {row[2]}")
